Concentration Inequalities

Concentration inequalities are used to bound the deviation of a random variable from some number, and they show up everywhere. The treatment here closely follows Chapter 2 of the excellent book High Dimensional Probability, by Vershynin. I have added some intuition, solved exercises, and included some simulations that I felt to be enlightening.

The motivating question will be the following: Consider a coin flipped $N$ times. What is the probability of observing $\frac{3}{4}N$ heads? We will find that the probability decreases exponentially with $N$.

Central Limit Theorem

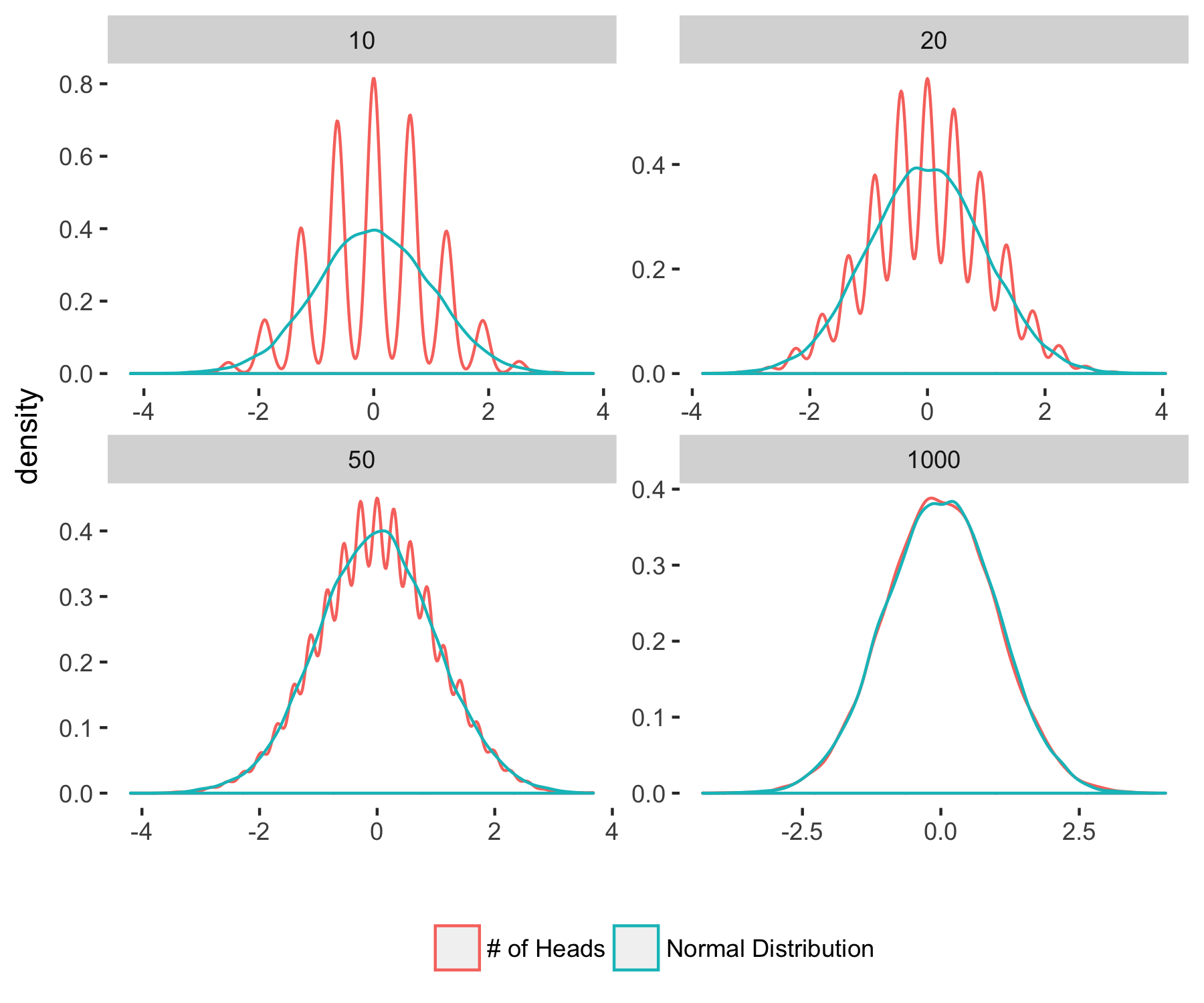

First, let’s discuss what the central limit theorem (CLT) says about our coin-flipping experiment. Denote the outcome of the $i$th coinflip as the Bernoulli random variable $X_i$ which takes the value 1 if the outcome is heads and 0 if it is tails. Denote the number of heads in an experiment with $N$ tosses as $S_N = \sum_i X_i$. The CLT (in this case, the de Moivre-Laplace theorem) states

where $Z_N = \frac{S_N - Np}{\sqrt{Np(1-p)}}$ is simply the number of heads centered and rescaled. That is, the distribution of the number of heads in our experiment will approach a normal distribution as the number of coin tosses $N$ goes to infinity.

Let’s check it out in R:

ns = c(10,20,50,1000) #Number of coin flips

p <- 0.5; # It's a fair coin

test_size <- 10000; #Number of times we will simulate the experiment.

toplots <- data.frame();

for (ni in 1:length(ns)){

n <- ns[ni]

S_N <- rbinom(n=test_size,size=n,prob=p); # Simulating the experiment by drawing from a binomial distribution

Z_N <- (S_N-n*p)/sqrt(p*(1-p)*n)

Z <- rnorm(test_size)

toplot <- data.frame(x= c(Z_N,Z),

group=c(rep("# of Heads",test_size),rep("Normal Distribution",test_size)),

N=rep(n,test_size))

toplots <- rbind(toplots,toplot)

}

ggplot() + geom_density(data=toplots, aes(x=x,color=group)) +facet_wrap(~N,scales="free")+theme(legend.title = element_blank(), panel.background = element_blank(), legend.position = "bottom") + xlab("")

Clearly, the density of $Z_N$ is approaching that of a normal distribution. Can this help us in getting an exponential bound for the probability of the number of heads exceeding $\frac{3N}{4}$? After all, the tails of a Gaussian decay exponentially, so if our random variable of interest approaches a Gaussian, shouldn’t that be enough?

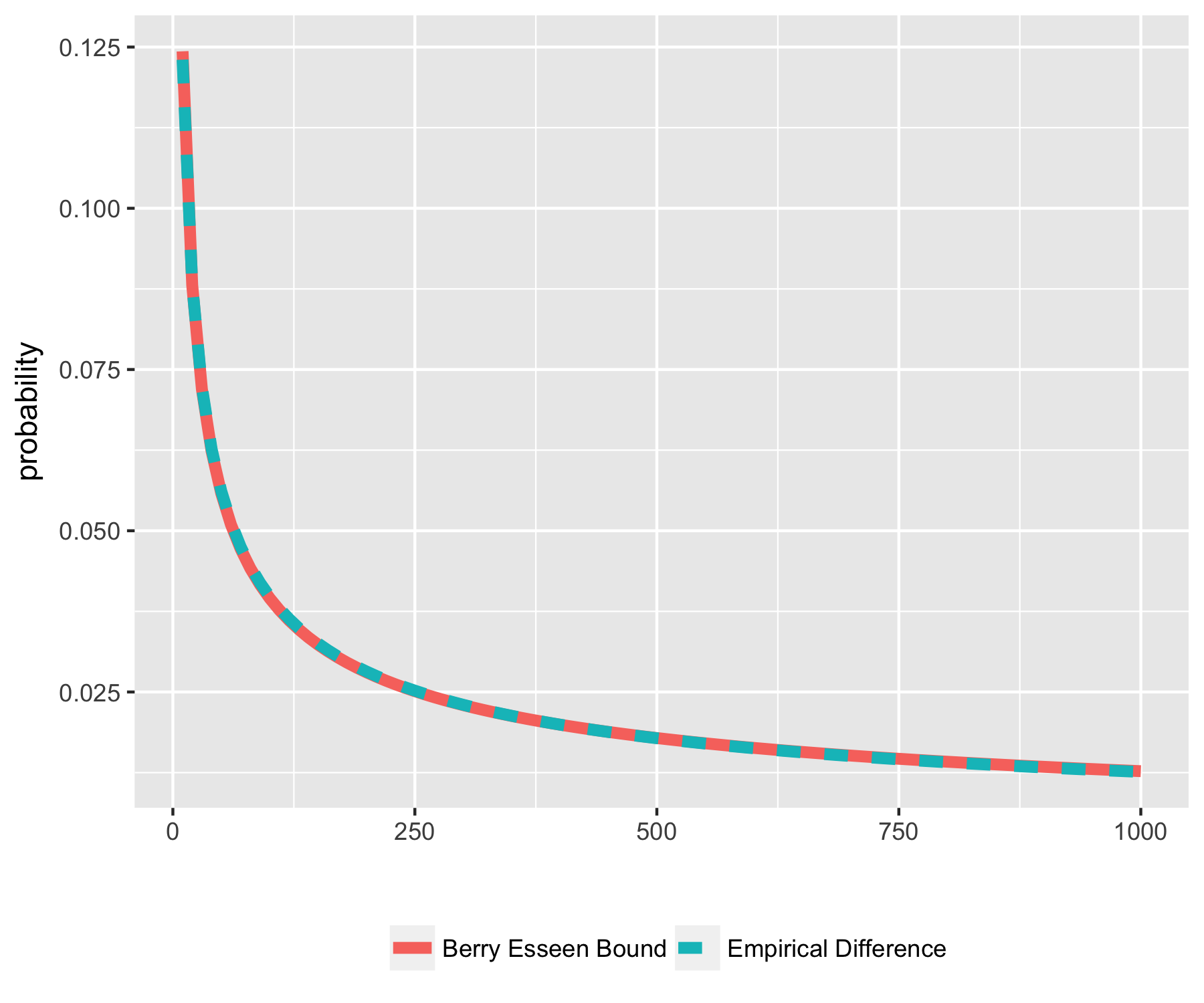

Interestingly, it turns out, this does not work at all! The problem is that the rate at which the $Z_N$ converges in distribution is not nearly fast enough. A quantitative version of the central limit theorem, the Berry-Esseen CLT, says that

where $Z \sim N(0,1)$ and $C$ is a constant that we won’t worry about here. The convergence is of order $1/\sqrt{N}$, so it can’t be used to obtain exponential decay. Furthermore, this estimate is sharp. Let’s see for ourselves in some simulated data

p=.5

ns = c(seq(from=10,to=1000, by=10))

empirical_diff <- rep(-1,length(ns))

test_size = 1000;

for (ni in 1:length(ns) ){

n <- ns[ni]

# We want to estimate P{Z_N > t}, and we know that S_N ~ binom(n,p). To use

# the CDF of S_N, we need to rescale t P{Z_N > t} = P{ S_N > t(\sqrt(p(1-p)N))+ Np}

ts <- seq(from=-3,to=3,by = 0.1)

ts_ <- ts*(sqrt(p*(1-p)*n))+n*p

Z_N_gt_t = 1-pbinom(ts_,size = n,prob=p)

g_gt_t = 1-pnorm(ts)

empirical_diff[ni] <- max(abs(Z_N_gt_t - g_gt_t)); # LHS of our inequality

}

berry_esseen <- predict(lm(empirical_diff ~ I(ns^-0.5))) # Let's fit a curve 1/sqrt(N) to compare

toplot <- data.frame(ns=c(ns,ns), probability= c(empirical_diff,berry_esseen),

group=c(rep("Empirical Difference",length(ns)),rep("Berry Esseen Bound",length(ns))))

ggplot(toplot, aes(x=ns, probability, color=group)) +geom_line(aes(linetype=group),size=2)+theme(legend.title = element_blank(), legend.position = "bottom") + xlab("")

The LHS of our inequality (in blue) decays exactly as $\sim\frac{1}{\sqrt{N}}$. This is really slow, much slower than exponential. So this is why we need concentration inequalities: CLT is not going to help us here.

Concentration Inequalities

In these notes, the bounds we will consider will increase in strength as they increase in the amount of information (i.e. assumptions) about the random variables (or sums of random variables) they are bounding. We begin with Markov Inequality, which uses only non-negativity of the random variable. Then we use it to prove Chebyshev, which uses the variance. By assuming independence, we obtain an exponential bound using Hoeffding. And finally, by taking into account the mean of our random variables, we obtain Chernoff.

Markov & Chebyshev

To get us warmed up, let’s start with the Markov inequality:

For any non-negative random variable $X$, \begin{align} \mathrm{P} \{ X \geq t \} \leq \frac{\mathrm {E} X}{t}\end{align}

Proof.

This is often a very weak bound, as it does not use anything we know about the random variable $X$. But, it is used in the proof of every other bound in these notes, such as Chebyshev inequality, which uses the variance of $X$ to obtain a concentration bound.

For any random variable $X$, \begin{align} \mathrm{P} \{ | X - \mathop{\mathrm E} X| \geq t \} \leq \frac{\mathrm {Var} (X)}{t^2}\end{align}

Proof.

Hoeffding’s Inequality

Hoeffding is going to give us the exponential bound for the deviation $\sum_i X_i$ that we are looking for. To do so, it makes a crucial assumption: independence.

To start off, we will prove a special case, where there variables $X_1,…,X_N$ are symmetric Bernoulli. This means that they take the values 1 or -1 with equal probability.

Proof.

With this theorem in hand, we can obtain bounds for our coin flipping problem. Let $Y_i = 2(X_i-\frac{1}{2})$, which is a symmetric binomial variable.

where the theorem was applied with $a = [1,1,…,1]$ so $\|a\|_{2}^2 = N$. So

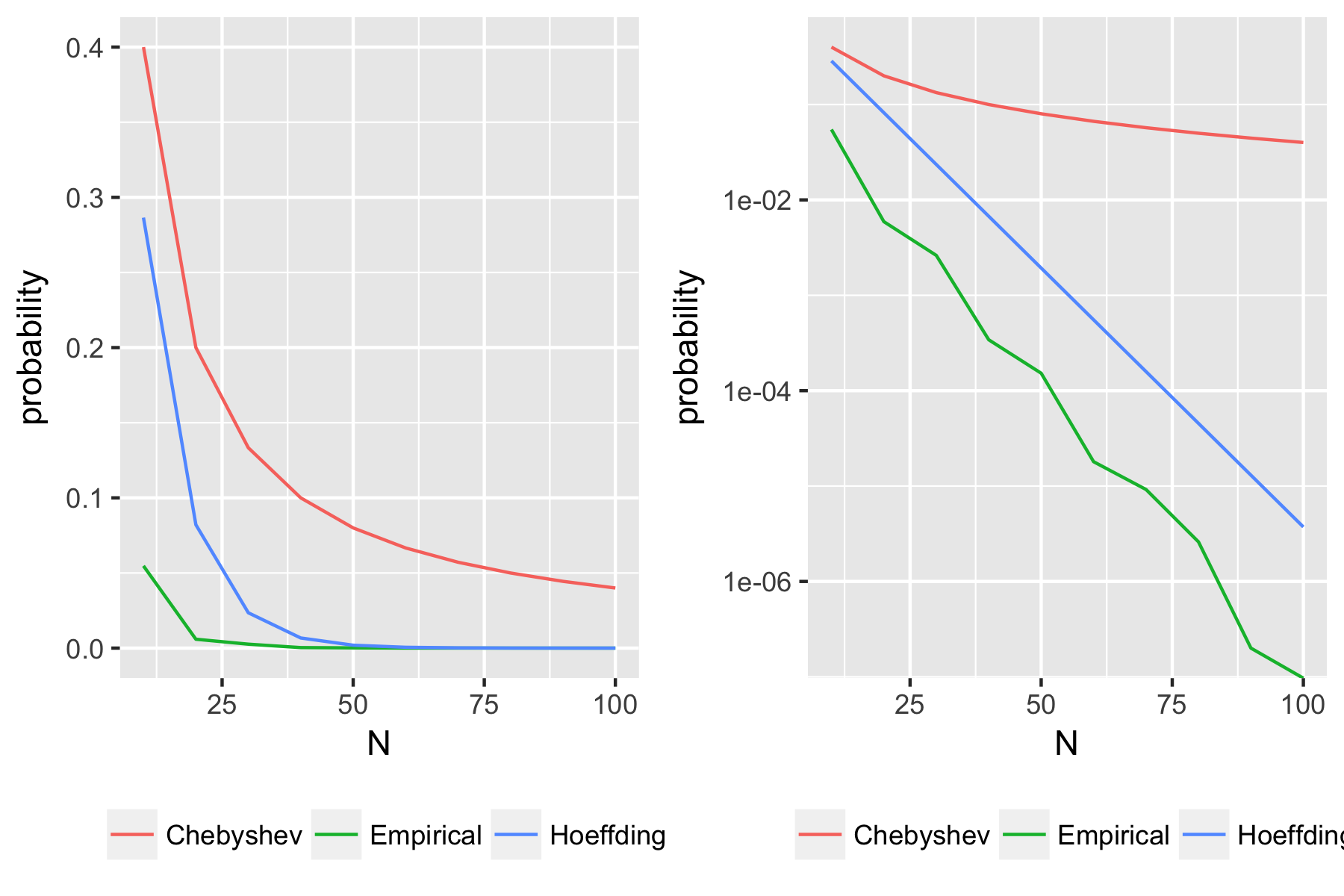

Simulating the coin flips, we see that Hoeffding’s Inequality gives the exponential bound that is observed from the simulation.

library(ggplot2)

library(patchwork) # devtools::install_github("thomasp85/patchwork")

p=.5

ns = c(seq(from=10,to=100, by=10))

chebyshev_upper_bounds <- 4/ns;

hoeffding_upper_bounds <- exp(-ns/8 )

empirical_upper_bounds <- rep(0,length(ns))

test_size = 1E7;

for (ni in 1:length(ns) ){

n <- ns[ni]

expvec <- rbinom(n=test_size,size=n,prob=p);

empirical_upper_bounds[ni] <- (sum(( expvec - n*p) > n/4 ))/test_size;

}

toplot <- data.frame(ns=c(ns,ns,ns), probability= c(empirical_upper_bounds, chebyshev_upper_bounds, hoeffding_upper_bounds),

group=c(rep("Empirical",length(ns)),rep("Chebyshev",length(ns)), rep("Hoeffding",length(ns))))

g_linear <- ggplot(toplot, aes(x=ns, probability, color=group)) + geom_line() +xlab("N")+ theme(legend.title = element_blank(), legend.position = "bottom")

g_log <- ggplot(toplot, aes(x=ns, probability, color=group)) + geom_line() + scale_y_log10() +xlab("N") + theme(legend.title = element_blank(), legend.position = "bottom")

g <- g_linear + g_log

We can prove a slightly more general statement for any bounded random variable.

Here is a nice exercise from Vershynin’s book that nicely demonstrates the utility of this inequality.

Solution.

Chernoff Bounds

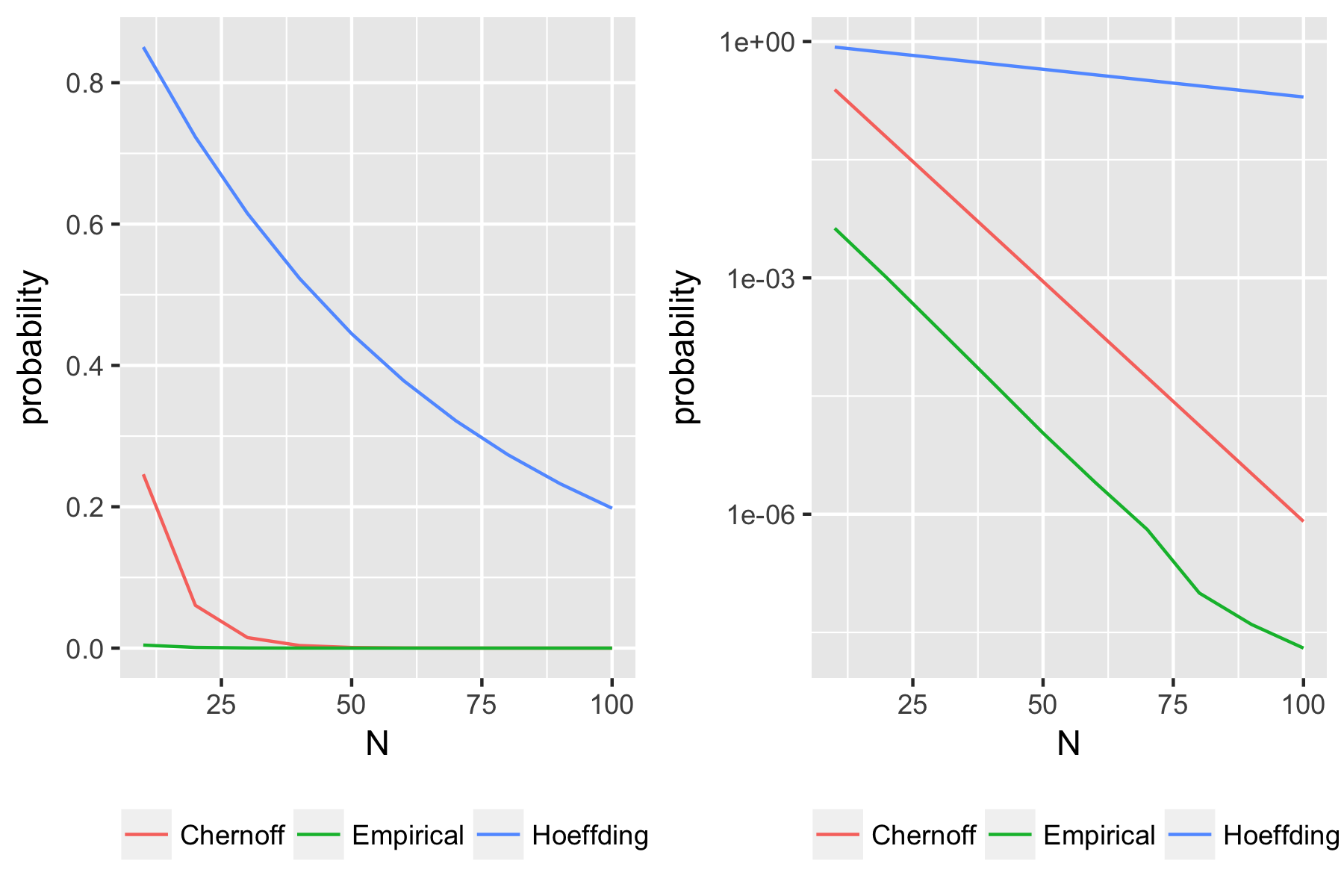

The Hoeffding bound for the Bernoulli random variable with $p=0.5$ was quite sharp. Let’s see what happens for small $p$. As before, let $X_i \sim \text{Bernoulli(p)}$ then $S_N = \sum_{i=1}^N X_i$. Suppose we need to bound the probability that $S_N > 10pN$

This is a bound for the probability that the Binomial random variable deviates from its mean by more than 9 times the mean. Intuitively, we would expect this to be highly improbable. Indeed, with $n=100$ and $p=0.5$, the bound above gives essentially zero. But with $p=0.01$, we get 0.2, which is completely useless! Hoeffding is just not enough for small $p$; we need something stronger.

Proof.

In our case, we have

require(ggplot2)

library(patchwork) # devtools::install_github("thomasp85/patchwork")

p=.01

ns = c(seq(from=10,to=100, by=10))

chernoff_upper_bounds <- exp(-p*ns)*((exp(1)/10))^(10*p*ns);

hoeffding_upper_bounds <- exp(-(2*(9*p)^2*ns) )

empirical_upper_bounds <- rep(0,length(ns))

test_size = 5E7;

for (ni in 1:length(ns) ){

n <- ns[ni]

expvec <- rbinom(n=test_size,size=n,prob=p);

empirical_upper_bounds[ni] <- (sum( expvec > 10*n*p ))/test_size;

}

toplot <- data.frame(ns=c(ns,ns,ns), probability= c(empirical_upper_bounds, chernoff_upper_bounds, hoeffding_upper_bounds),

group=c(rep("Empirical",length(ns)),rep("Chernoff",length(ns)), rep("Hoeffding",length(ns))))

g_linear <- ggplot(toplot, aes(x=ns, probability, color=group)) + geom_line() +xlab("N")+ theme(legend.title = element_blank(), legend.position = "bottom")

g_log <- ggplot(toplot, aes(x=ns, probability, color=group)) + geom_line() + scale_y_log10() +xlab("N") + theme(legend.title = element_blank(), legend.position = "bottom")

g <- g_linear + g_log

Now this is much better! By taking into account the means of $X_i$, we get a much tighter bound when those means are very small.

Final Notes

The primary reference for these notes is Prof. Roman Vershynin’s High Dimensional Probability, which I highly recommend. I also found these notes by Prof. John Duchi to be helpful. Also many thanks to Prof. Stefan Steinerberger and Prof. John Lafferty whose classes got me first interested in the topic.

These notes focused on sums of Bernoulli variables, but the field of concentration inequalities is remarkably deep. For example, please see Vershynin’s book for extensions of these inequalities for sub-Gaussian and sub-Exponential variables, which I did not write about here. Also, please contact me (Twitter or Email) for comments or questions!